If you missed the first part of this series, you can click here to see it: https://krisfeher.com/how-to-set-up-cicd-pipeline-on-aws-using-bitbucket-ecs-ecr

Let's continue.

1. Setting up EC2

Cluster

Let's set up an ECS cluster to host our application. If you have this already available you can skip this step and jump to the next one.

In ECS, click on "Create cluster". In the infrastructure section you can choose which EC2 instance type you'd like your Docker host to be.

The network configuration will already be filled in with your VPC and all of its subnets, but you can customize it if you want.

Keep the public subnets that are selected by default.

This will create an EC2 instance along with an auto-scaling group that scales your EC2 instances (with default dynamic scaling) and a launch template, which is just a blueprint for your EC2 instances.

Your next step should be to modify the launch template behind the auto-scaling group, as it's totally wrong 🙂

For a few things we need to create a new launch template version: EC2 => Launch templates => Modify template (Create new version)

- Pick an AMI that's ECS-optimized. Search for "ECS" within the AWS Marketplace AMIs, and pick the operating system you wish. Here's the one I used for Amazon Linux in us-east: ami-04d4dd7b34e293332

- Change the user data: open up the "Advanced" section and dunk this script in, where "test-cluster" is the name of your cluster. If it's already there, happy days.

#!/bin/bash
echo ECS_CLUSTER=test-cluster >> /etc/ecs/ecs.config

A few things need to happen for an EC2 instance to attach to an ECS cluster, namely:

- user data with the cluster name, as above

- the ECS agent installed, which is included in the AMI

- a link to the capacity provider, which AWS set up when you created the cluster

- an IAM instance profile for the ECS agent, also set up by AWS

- outbound security group rules that allow communication, also set up by AWS

I'll provide a Terraform template later on that includes all of these.

2. Setting up ECS

Task definition

This is a blueprint for our application that defines the container configuration (Docker image to use, CPU and memory requirements, environment variables, and networking settings).

To create one, click on "Task definitions" in the left-hand menu within ECS, create a new task definition, and give it a name. You can fill in each section as below:

Infrastructure requirements

You can leave most of this default.

Only a few to change:

- Launch type is EC2

- network mode is bridge (this is important, as awsvpc doesn't support dynamic port mapping)

- (optional) Task size: 512 MB (we'll not require much memory for this)

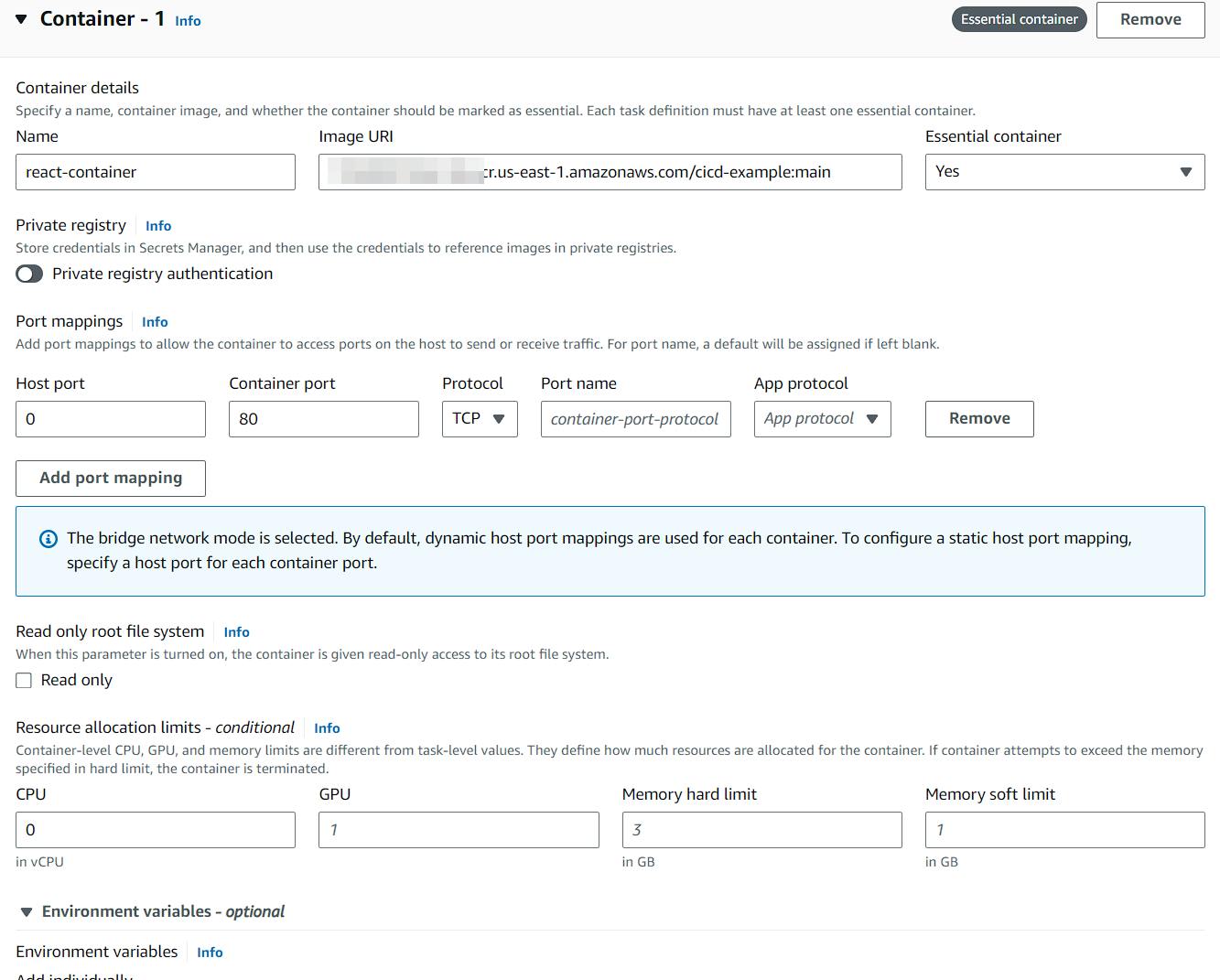

Container

Some of the changes I've made:

- Add a name and the ECR image URL.

- Host port: 0 (this indicates dynamic port mapping, so each task gets a free host port assigned at runtime)

Once done, click create and it'll create revision 1 of your task definition.

Again, I'll provide a TF template for this.

Service

Next up is the service. Why not a task, you may ask?

A Task Definition outlines container requirements, such as Docker image, ports, resources, and environment variables; a Task runs the defined containers, suitable for short-lived jobs, and is not replaced automatically if stopped.

A Service ensures a set number of Tasks are constantly running, replaces failed Tasks, can balance them across resources and zones, and can be configured with a load balancer, unlike standalone Tasks.

For our purpose, a service is better.

Here are the sections one by one:

- environment

Choose the default capacity provider strategy we created earlier.

- deployment configuration

Give a name to your service, add the task definition we created earlier and select "Replica" as the service type.

You can then select how many tasks (clones) you want to run on this service. For the sake of simplicity I selected one.

- load balancing

This is an important one. Make sure you select your current load balancer and the target group you defined earlier. If you're unable to do so (it's greyed out or not available), make sure of the following:

- your target group exists, does not have targets, and is an instance-type target group

- your target group is assigned to the load balancer, within the correct VPC

- your task definition uses "bridge" networking

- your container definition has a host port of 0
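To make the first two conditions concrete, here is a rough Terraform sketch of an instance-type target group. It reuses the exampleTG and my_vpc names that the templates in this post reference; the name value, port, protocol and health check settings are assumptions for illustration, so adjust them to whatever you set up in the first part.

```hcl
# Sketch only: an instance-type target group that ECS can register tasks into.
resource "aws_lb_target_group" "exampleTG" {
  name        = "example-tg"      # assumption: any unique name works
  port        = 80                # assumption: overridden per target by dynamic port mapping
  protocol    = "HTTP"
  target_type = "instance"        # needed for bridge networking; "ip" is for awsvpc
  vpc_id      = aws_vpc.my_vpc.id # must be the VPC your load balancer lives in

  health_check {
    path    = "/"                 # assumption: the app answers on the root path
    matcher = "200"
  }
}
```

ECS registers each task with the instance ID and the dynamically assigned host port, which is why the target group should start out empty.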

You can leave the rest of the options as default. There's a lot more to pick here, but we'll not delve into those options.

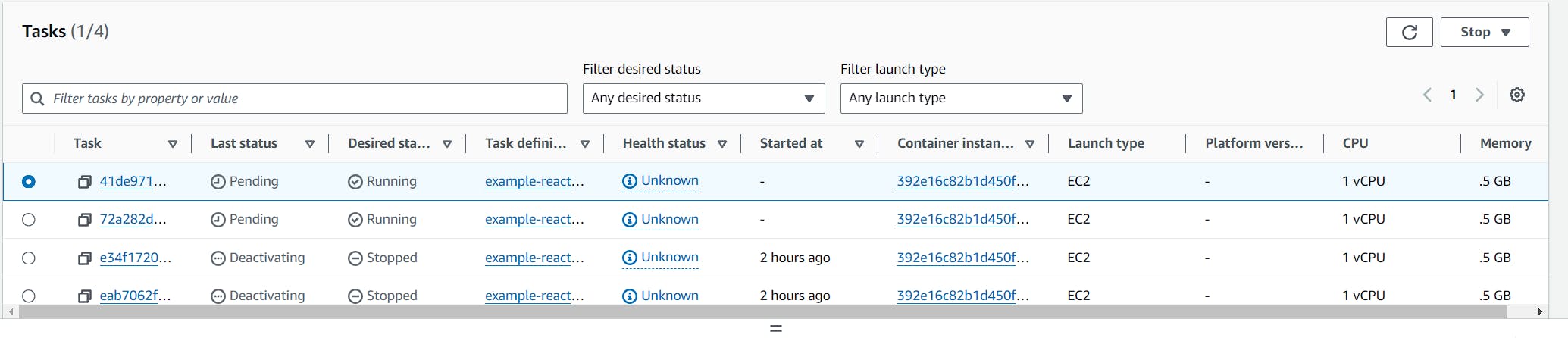

Once this is done, you can see the tasks provisioning for the service (depending on how many tasks you picked earlier).

3. Terraform templates

Here are the Terraform templates:

resource "aws_launch_template" "EC2LaunchTemplate" {
  name_prefix   = "ECSLaunchTemplate-"
  image_id      = "ami-04d4dd7b34e293332" # ECS-optimized AMI
  instance_type = "t3.medium"
  key_name      = aws_key_pair.sshkey.key_name

  iam_instance_profile {
    arn = aws_iam_instance_profile.ecsInstanceRole.arn
  }

  vpc_security_group_ids = [aws_security_group.ecs_sg.id]

  user_data = base64encode("#!/bin/bash\necho ECS_CLUSTER=${var.cluster_name} >> /etc/ecs/ecs.config")
}

resource "aws_key_pair" "sshkey" {
  key_name   = "ssh-key"
  public_key = "add your own SSH public key here you generated on your PC"
}

resource "aws_autoscaling_group" "AutoScalingGroup" {
  name_prefix      = "ecs-asg-"
  min_size         = 1
  max_size         = 2
  desired_capacity = 1

  launch_template {
    id      = aws_launch_template.EC2LaunchTemplate.id
    version = "$Latest"
  }

  vpc_zone_identifier = [aws_subnet.public_subnet_1.id, aws_subnet.public_subnet_2.id]
  health_check_type   = "EC2"
}

resource "aws_iam_role" "ecsInstanceRole" {
  name_prefix = "ecsInstanceRole-"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action = "sts:AssumeRole"
      Effect = "Allow"
      Principal = {
        Service = "ec2.amazonaws.com"
      }
    }]
  })
}

resource "aws_security_group" "ecs_sg" {
  name_prefix = "ecs-sg-"
  vpc_id      = aws_vpc.my_vpc.id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_iam_instance_profile" "ecsInstanceRole" {
  name = aws_iam_role.ecsInstanceRole.name
  role = aws_iam_role.ecsInstanceRole.name
}

resource "aws_iam_role_policy_attachment" "ecs_role_policy" {
  role       = aws_iam_role.ecsInstanceRole.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
}

resource "aws_ecr_repository" "ECRRepository" {
  name = "cicd-example"
}

resource "aws_ecs_cluster" "ECSCluster" {
  name = var.cluster_name

  # Note: newer AWS provider versions move the two settings below into a
  # separate aws_ecs_cluster_capacity_providers resource.
  capacity_providers = [aws_ecs_capacity_provider.ecs_capacity_provider.name]

  default_capacity_provider_strategy {
    capacity_provider = aws_ecs_capacity_provider.ecs_capacity_provider.name
    weight            = 1
    base              = 1
  }
}

resource "aws_iam_policy" "ecs_logs_policy" {
  name        = "ecsLogsPolicy"
  description = "Allow ECS tasks to interact with CloudWatch Logs"

  policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow",
        Action = [
          "logs:CreateLogStream",
          "logs:PutLogEvents",
          "logs:CreateLogGroup",
          "logs:DescribeLogStreams"
        ],
        Resource = "arn:aws:logs:*:*:*"
      }
    ]
  })
}

resource "aws_iam_policy_attachment" "ecs_logs_policy_attachment" {
  name       = "ecs-logs-policy-attachment"
  roles      = [aws_iam_role.ecsTaskExecutionRole.name]
  policy_arn = aws_iam_policy.ecs_logs_policy.arn
}

resource "aws_iam_role" "ecsTaskExecutionRole" {
  name = "ecsTaskExecutionRole"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow",
        Principal = {
          Service = "ecs-tasks.amazonaws.com"
        },
        Action = "sts:AssumeRole"
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "ecsTaskExecutionRole_policy" {
  role       = aws_iam_role.ecsTaskExecutionRole.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}

resource "aws_ecs_task_definition" "ECSTaskDefinition" {
  family                   = "example-react-project"
  execution_role_arn       = aws_iam_role.ecsTaskExecutionRole.arn
  network_mode             = "bridge"
  requires_compatibilities = ["EC2"]
  cpu                      = "1024"
  memory                   = "512"

  container_definitions = templatefile("container_definitions.json.tpl", {
    account_id = data.aws_caller_identity.current.account_id
  })
}

resource "aws_ecs_service" "ECSService" {
  name                               = "react-service"
  cluster                            = aws_ecs_cluster.ECSCluster.arn
  task_definition                    = aws_ecs_task_definition.ECSTaskDefinition.arn
  desired_count                      = 2
  deployment_maximum_percent         = 200
  deployment_minimum_healthy_percent = 100
  scheduling_strategy                = "REPLICA"

  load_balancer {
    target_group_arn = aws_lb_target_group.exampleTG.arn
    container_name   = "react-container"
    container_port   = 80
  }
}

resource "aws_ecs_capacity_provider" "ecs_capacity_provider" {
  name = "EC2CapacityProvider"

  auto_scaling_group_provider {
    auto_scaling_group_arn = aws_autoscaling_group.AutoScalingGroup.arn

    managed_scaling {
      maximum_scaling_step_size = 1
      minimum_scaling_step_size = 1
      status                    = "ENABLED"
      target_capacity           = 100
    }
  }
}
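The templates above reference var.cluster_name and data.aws_caller_identity.current without declaring them. A minimal sketch of those declarations could look like the following. The default value is an assumption taken from the user data example earlier, and it has to match whatever cluster name you use elsewhere (for example in your pipeline). The VPC, subnets and target group are assumed to come from your existing setup.

```hcl
# Cluster name shared by the launch template user data and the ECS cluster
variable "cluster_name" {
  description = "Name of the ECS cluster the EC2 instances register with"
  type        = string
  default     = "test-cluster" # assumption: use your own cluster name
}

# Exposes the current AWS account ID, used to build the ECR image URL
data "aws_caller_identity" "current" {}
```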

And the container definition:

container_definitions.json.tpl

[
  {
    "name": "react-container",
    "image": "${account_id}.dkr.ecr.us-east-1.amazonaws.com/cicd-example:main",
    "cpu": 0,
    "portMappings": [
      {
        "containerPort": 80,
        "hostPort": 0,
        "protocol": "tcp"
      }
    ],
    "essential": true,
    "environment": [],
    "mountPoints": [],
    "volumesFrom": [],
    "logConfiguration": {
      "logDriver": "awslogs",
      "options": {
        "awslogs-create-group": "true",
        "awslogs-group": "/ecs/example-react-project",
        "awslogs-region": "us-east-1",
        "awslogs-stream-prefix": "ecs"
      }
    }
  }
]
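Because hostPort is 0, each task ends up on a host port assigned at runtime, so the load balancer needs to reach the instances on that whole range. The ecs_sg security group in the templates only opens port 22, so you would likely need an extra ingress block along these lines inside it. This is a sketch: aws_security_group.alb_sg is a hypothetical name for your load balancer's security group, and 32768-65535 is a commonly used ephemeral range that you should verify on your instances (see /proc/sys/net/ipv4/ip_local_port_range).

```hcl
# Fragment: add inside resource "aws_security_group" "ecs_sg" { ... }
ingress {
  description     = "Dynamic host ports from the load balancer"
  from_port       = 32768 # assumption: start of the ephemeral port range
  to_port         = 65535
  protocol        = "tcp"
  security_groups = [aws_security_group.alb_sg.id] # hypothetical resource name
}
```

Keeping it as an inline block matches how ecs_sg already declares its rules, which avoids mixing inline rules with separate rule resources on the same group.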

The Terraform templates above represent the manual steps you've done before.

The AWS console does a lot of the wiring for you automatically, so you don't have to do it by hand there. That isn't the case for Terraform templates, hence the lengthy configuration.
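One piece of that wiring depends on your AWS provider version. Newer versions of the provider manage a cluster's capacity providers through a separate aws_ecs_cluster_capacity_providers resource instead of arguments on aws_ecs_cluster. If the cluster block above is rejected, a sketch of the equivalent split, reusing the same resource names, could look like this (the ECSClusterCapacityProviders label is made up for illustration):

```hcl
resource "aws_ecs_cluster" "ECSCluster" {
  name = var.cluster_name
}

# Attaches the capacity provider to the cluster and makes it the default
resource "aws_ecs_cluster_capacity_providers" "ECSClusterCapacityProviders" {
  cluster_name       = aws_ecs_cluster.ECSCluster.name
  capacity_providers = [aws_ecs_capacity_provider.ecs_capacity_provider.name]

  default_capacity_provider_strategy {
    capacity_provider = aws_ecs_capacity_provider.ecs_capacity_provider.name
    weight            = 1
    base              = 1
  }
}
```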

One more thing: before you deploy the ECS service, make sure you run your Bitbucket pipeline. Otherwise it won't deploy, as the image the task definition points to won't exist in ECR yet.

4. Next steps

So far you should have an architecture that deploys your code to ECR just fine; however, it doesn't yet re-trigger the deploy step in ECS.

An easy way to get around this issue is to trigger a re-deploy via this Bitbucket pipe:

https://bitbucket.org/product/features/pipelines/integrations?search=ecs&p=atlassian/aws-ecs-deploy

You can simply add a few new lines to your bitbucket-pipelines.yml file (CLUSTER_NAME and SERVICE_NAME must match the names of your own cluster and service):

- pipe: atlassian/aws-ecs-deploy:1.12.1
  variables:
    AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
    AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
    AWS_DEFAULT_REGION: "us-east-1"
    CLUSTER_NAME: "cicd-cluster"
    SERVICE_NAME: "react-service"
    FORCE_NEW_DEPLOYMENT: "true"

Now, on every push, the service will redeploy.

There you go! You now have a very basic pipeline that deploys to ECS.

Needless to say, please don't use this in production, as this isn't meant for that. This is meant to show how you could do the same, and provides a baseline you can improve later on.